What's the Hardest Part of Doing Live VR?

What’s the hardest part of doing great quality live VR distribution? It’s not where you think it might be. (And 5G is not necessarily the answer.)

As Tiledmedia’s networker-in-chief, I talk to a lot of people who want to distribute live VR at high quality. They find us through a Google search or get referred by an industry insider. Sometimes they have an entire end-to-end chain in place and sometimes they only have a dream. I love these discussions; they teach me where the VR world is headed and they drive our product roadmap. We usually talk through the entire chain, from production to consumption. And invariably we hit that bottleneck that we need to resolve before we can do our first test. A hint: it’s not very VR-specific.

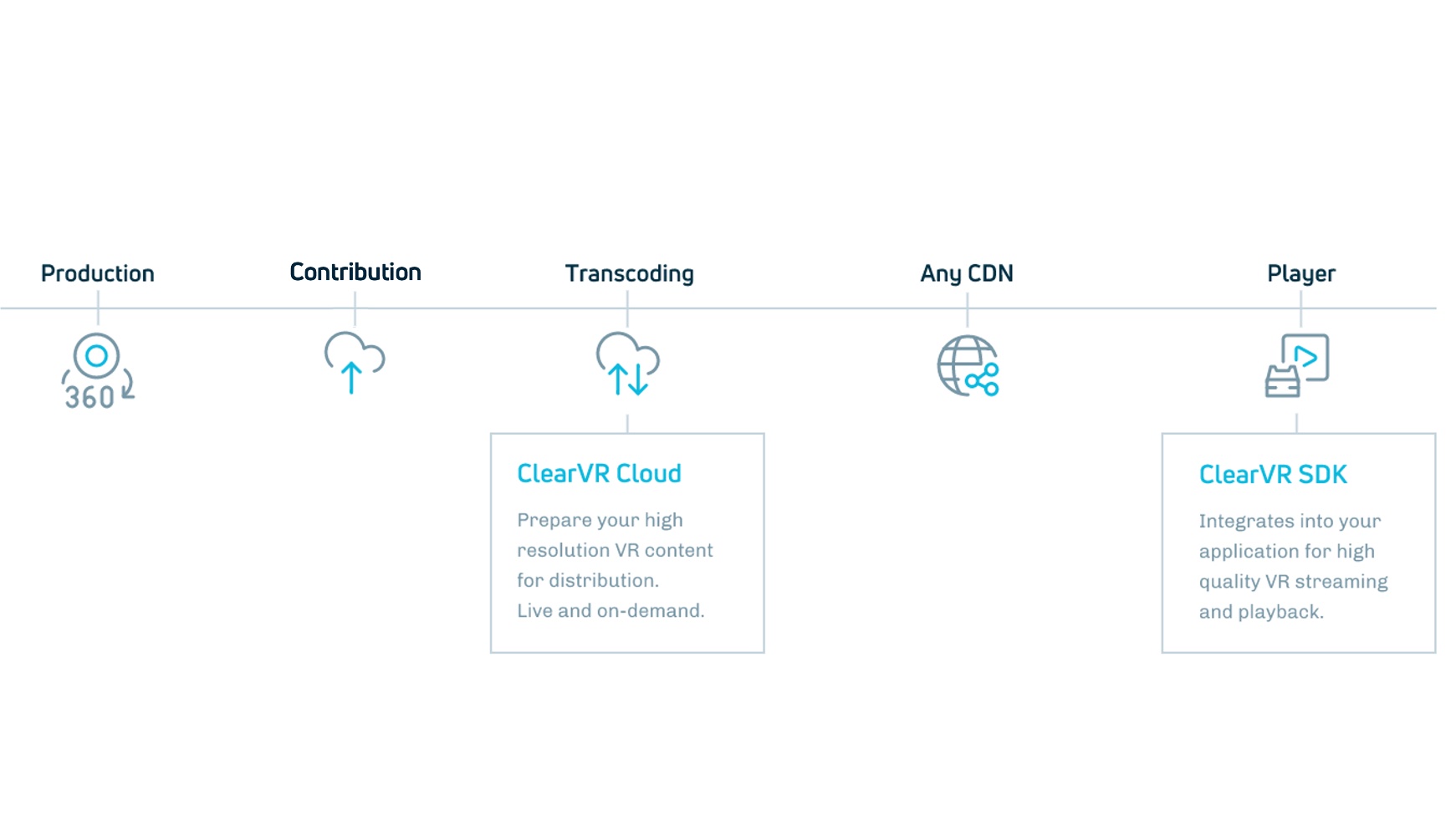

A high-quality VR distribution chain requires a lot of elements to come together. A great production, a solid distribution system, and a user-friendly application come to mind. Let’s see about critical factors for each of these elements.

No Zooming in a Headset

In production, camera location is everything. There is no zooming in a VR headset, so if you are too far from the action, the user experience will be poor. One technical element that always receives much attention is stitching. That’s fusing the input of multiple imaging sensors into a single “Equirectangular Projection”, or ERP. Yes, that’s hard, even a black art. But solutions exist, also for live productions. Some cameras output a stitched ERP. External software, like Voysys, can also do the trick.

Production? Check.

The same goes for distribution. Indeed, 8K VR distribution requires quite a bit of bandwidth, and how do you even decode it on a device with a 4K decoder? This is what ClearVR enables. The bandwidth is very reasonable, even at 8K or higher, and by decoding only the viewport you can get away with a 4K decoder and still have 8K quality. The distribution can rely on everyday HTTP streaming, which works on any modern CDN, so it’s extremely scalable.
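A rough pixel count shows why decoding the whole sphere is not an option on such a device. The sketch below is only an illustration: it assumes that "8K" means an 8192 x 4096 ERP frame and that a "4K decoder" handles 3840 x 2160 pixels per frame. It says nothing about how ClearVR actually selects or packs tiles.

```python
# Assumption: "8K" is an 8192 x 4096 ERP frame, and a "4K decoder"
# can handle 3840 x 2160 pixels per frame.
ERP_8K = (8192, 4096)
DECODER_4K = (3840, 2160)


def pixels(size):
    """Number of pixels in a (width, height) frame."""
    width, height = size
    return width * height


def decodable_fraction(source, decoder):
    """Fraction of the source frame's pixels that fits in one decoder frame."""
    return min(1.0, pixels(decoder) / pixels(source))


print(f"{decodable_fraction(ERP_8K, DECODER_4K):.1%} of the sphere fits in the decoder")
```

Under these assumptions only about a quarter of the full picture fits in the decoder at once, which is why restricting decoding to the viewport matters.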

Distribution? Check.

The app is not the bottleneck either. You can build one yourself, or have one built for you. Several suppliers can make a great app, whether in Unity on a headset, or a native app on a mobile device. A party like Cosm can add social functionality where your users can voice-chat about what they are seeing unfold from their virtual skybox (and ClearVR will keep them in sync).

Consumption? Check.

Getting that Feed out of the Camera

So if we have production, distribution and consumption covered, where is that bottleneck I am talking about?

Well, it’s the contribution.

Getting a stable upstream video feed out from where your camera sits is surprisingly hard. Contributing your feeds into a cloud transcoder requires significant bandwidth. Let’s look at our ClearVR Cloud platform. It will transcode the content, and to do that it will decode the full 360 or 180 picture, cut it into tiles, and then re-encode these tiles. While such a transcoding step (decoding/re-encoding) is present in every internet distribution system, coding artefacts will show up extra-large in a VR headset.

This means that the quality of the contribution feed is even more important than it normally already is. The video coming into ClearVR Cloud must be visually lossless. A high quality 8K contribution feed (say, 8192 x 4096 pixels) usually requires north of 100 Mbit/s, depending on the subject matter. And that’s per camera, where many productions have 4 or more VR cameras. A stable, fast connection is required, as is a stable and error-resilient contribution protocol.
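To make the arithmetic concrete, here is a back-of-the-envelope sketch. The 100 Mbit/s per camera comes from the figure above; the 25% headroom is purely an illustrative assumption, not a recommendation.

```python
def required_uplink_mbps(cameras: int,
                         per_camera_mbps: float = 100.0,
                         headroom: float = 0.25) -> float:
    """Total upstream bandwidth for all cameras, plus safety headroom.

    per_camera_mbps: bitrate of one contribution feed in Mbit/s.
    headroom: extra fraction reserved on top (hypothetical value).
    """
    if cameras < 1:
        raise ValueError("need at least one camera")
    return cameras * per_camera_mbps * (1.0 + headroom)


# Four 8K cameras at 100 Mbit/s each, with 25% headroom
print(required_uplink_mbps(4))  # 500.0
```

Even before any headroom, a four-camera production is looking at roughly 400 Mbit/s of sustained upstream capacity.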

I have seen fixed connections fail, and the “open Internet” obviously has its challenges. Together with our partners we always manage to get it to work, but it can take a few iterations and it always requires testing.

Oh, and forget about transcoding on-premises. It requires a lot of on-site hardware and it only increases your outgoing bandwidth. That’s true for a tiled transcode, which produces quite a few renditions to keep user bandwidth as low as possible, and it’s equally true for an adaptive-bitrate “legacy” transcode that distributes the entire sphere instead of just the viewport.

5G to the Rescue?

Mobile contribution seems like a great solution because it allows the VR camera to be anywhere, without depending on any cables. I talk to a lot of people who want to do virtual tourism, and they really like this option. Many of them think that 5G will provide that magic connectivity, with boundless bandwidth.

Take a mobile VR camera like the Kandao QooCam Enterprise which streams live 8K output over RTMP. Hook it up to a mobile 5G modem and off you go. I would agree in theory, but the practice is (still) different.

We have worked with customers and partners in the largest cities in the world, where the most advanced 5G networks are being deployed. We turn on the platform, give them an RTMP link for pushing their stream and wait. They do a speedtest and they are happy to see 300 Mbit/s.

Yes, sure, but that’s downstream bandwidth.

Upstream is usually not nearly as much. Maybe 30 Mbit/s will work sort-of-reliably. Maybe. If we’re really lucky, we get 60 Mbit/s, but the camera and modem need to be outside. And that’s with a stationary camera; forget about walking around. (Which is actually a relief for headset users – a camera on a backpack or, even worse, a bicycle is a great way to get your users motion-sick : )
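One way to turn upload measurements into an encoder setting is to plan around the worst sample rather than the average, since a live feed breaks at the dips. This is a minimal sketch under stated assumptions: the sample values and the 0.7 safety factor are hypothetical, and the measurements must be upload results, not the download figure a speedtest shows first.

```python
from typing import Sequence


def sustainable_bitrate_mbps(uplink_samples_mbps: Sequence[float],
                             safety_factor: float = 0.7) -> float:
    """Conservative encoder bitrate derived from repeated upload measurements."""
    if not uplink_samples_mbps:
        raise ValueError("need at least one uplink measurement")
    # Use the minimum, not the mean: the stream must survive the worst moment.
    return min(uplink_samples_mbps) * safety_factor


# Hypothetical upload results in Mbit/s, taken at the camera position
samples = [62.0, 48.0, 31.0, 55.0, 35.0]
budget = sustainable_bitrate_mbps(samples)
print(f"Encode at no more than about {budget:.0f} Mbit/s")
```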

That doesn’t mean 5G is useless – quite the contrary. It’s great to see it being deployed. There is a lot of demand for doing live production on location, and there is no doubt 5G will help. Speeds will increase, coverage will improve. Bonded mobile channel contribution services (e.g., Mobile Viewpoint’s) can use 5G to improve connectivity speeds. Guaranteed-bandwidth services (5G slicing) may become available at some point. Yes, “may” – guaranteed-bandwidth cellular services have been announced forever; the required frequency management may make slicing hairy and/or expensive. Perhaps when cells get much smaller, but even then.

Anyway, 5G will help, and it will take time.

A Reliable Protocol

Next to speed, reliability is a thing. Several contribution protocols exist, and they do make a difference. Cameras often conveniently support RTMP, and we support it too. But the most convenient choice is not always the best. If you have a very reliable connection, sure, choose RTMP. Over mobile networks I would rather advise SRT: Secure Reliable Transport. ClearVR Cloud handles both.

On fixed wired networks, we usually see customers rely on HLS Pull, where ClearVR Cloud pulls media segments from a server. The server can be on-site, or it might be at the customer’s edge, e.g. in its Broadcast Center or Master Control Room. They have dedicated lines in place between a venue (e.g. a football stadium) and their broadcast facility.

ClearVR Cloud also supports HLS Push, where our partner pushes the media to ClearVR Cloud, but we prefer using HLS Pull. Why? We can use multiple parallel TCP connections to increase effective throughput and reduce the chances of head-of-line blocking; we can pull with multiple clients at once to increase redundancy and support failover; we can pull faster than real-time during startup to establish a buffer, … but now I am going into the weeds.
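The parallel-connection idea can be sketched with nothing but the Python standard library. This is an illustration of the general technique, not ClearVR Cloud's implementation: the function names, the connection count and the segment names are all hypothetical. Each worker thread calls `urlopen` separately, so each download gets its own TCP connection.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List
from urllib.request import urlopen


def fetch_segment(url: str, timeout: float = 5.0) -> bytes:
    """Download one media segment over its own HTTP connection."""
    with urlopen(url, timeout=timeout) as response:
        return response.read()


def pull_segments(urls: Iterable[str],
                  fetch: Callable[[str], bytes] = fetch_segment,
                  max_connections: int = 4) -> List[bytes]:
    """Fetch segments in parallel and return them in playlist order."""
    with ThreadPoolExecutor(max_workers=max_connections) as pool:
        # map() yields results in input order, even if downloads finish out of order.
        return list(pool.map(fetch, urls))


# Demonstration with a stand-in fetch function, so no network is needed.
demo = pull_segments(["seg1.ts", "seg2.ts", "seg3.ts"], fetch=lambda u: u.encode())
print(demo)
```

A stalled download then holds up only its own connection while the other segments keep arriving. Pulling faster than real time at startup falls out of the same structure: the puller simply requests every segment the playlist already lists.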

Illegal Bitstreams

Contribution is not just about sheer bandwidth and reliability – it’s more than just the transport. Coding and packaging provide another challenge. Contribution encoders sometimes do weird things, and the same goes for live packagers. Producing legal (standards-compliant) bitstreams can be hard, especially when it comes to timestamps. And these timestamps are very important if we want to give users seamless camera switches that don’t make them jump all over the event timeline.

But I understand: our partners need to be able to work with the tools they can get. We can’t demand that they rewrite their encoder or their HLS packager. So over time, we have made ClearVR Cloud very robust in accepting a wide variety of legal and semi-legal input streams.

As we keep encountering new quirks, we keep adding new code paths to cope with them. Quirks that include time stamps rounded to seconds where they really shouldn’t have been, weird and varying segment sizes, audio that goes missing, and more. Or feeds that completely disappear temporarily and then come back again. We have taught ClearVR Cloud how to deal with these bitstream flaws, and how to auto-reconnect after camera or contribution hiccups.
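To give a flavour of what spotting such quirks can look like, here is a small heuristic sketch that reads the `#EXTINF` segment durations from an HLS media playlist and flags two of the patterns mentioned above. It is not ClearVR Cloud code. The function names, the 0.5 second spread threshold and the sample playlist are assumptions for illustration, and whole-second durations are only a hint, since they can also be perfectly legitimate.

```python
from typing import List


def extinf_durations(playlist_text: str) -> List[float]:
    """Extract segment durations (seconds) from '#EXTINF:<duration>,[<title>]' lines."""
    durations = []
    for line in playlist_text.splitlines():
        line = line.strip()
        if line.startswith("#EXTINF:"):
            value = line[len("#EXTINF:"):].split(",", 1)[0]
            durations.append(float(value))
    return durations


def find_quirks(durations: List[float], max_spread: float = 0.5) -> List[str]:
    """Return human-readable warnings; an empty list means nothing suspicious."""
    quirks = []
    if durations and all(d == int(d) for d in durations):
        quirks.append("durations look rounded to whole seconds")
    if durations and max(durations) - min(durations) > max_spread:
        quirks.append("segment durations vary widely")
    return quirks


# Hypothetical playlist showing both quirks
sample = """#EXTM3U
#EXT-X-TARGETDURATION:4
#EXTINF:2,
seg1.ts
#EXTINF:4,
seg2.ts
#EXTINF:2,
seg3.ts
"""
print(find_quirks(extinf_durations(sample)))
```

Detection is the easy half; deciding how to repair the timeline once a quirk is found is where the real work sits.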

(While I am at it, similar robustness has been added to our ClearVR SDK. In the run-up to the Tokyo Olympics, we greatly expanded the range of bitstreams that the ClearVR SDK can consume: compliant as well as not-really-compliant ones. The ClearVR SDK is used in the Olympics VR apps, for mobile devices and headsets, handling all VR and non-VR streams. But I digress.)

Magic Bullets

My message is really this: while there are no magic bullets, great quality live VR is absolutely possible with proper planning, preparation and pre-event testing. This applies to fixed as well as mobile contribution. Working closely with partners like PCCW, we have successfully distributed events in 8K VR, live, using contribution over 5G. We can make it work.

All that’s needed is some planning and preparation. And isn’t that the standard for any professional video production?

Rob (please contact me at rob at tiledmedia dot com if this post triggers a conversation)

July 22, 2021

Author

Rob Koenen
